Muhao Chen joined the Cognitive Computation Group in 2019 as a postdoctoral researcher, and he still collaborates with us from time to time.

He is currently an Assistant Professor in the Department of Computer Science at UC Davis, where he directs the Language Understanding and Knowledge Acquisition (LUKA) Lab. Muhao’s research focuses on robust and minimally supervised data-driven machine learning for Natural Language Processing. Most recently, his group’s research has focused on accountability and security problems of large language models and multi-modal language models. Previously, Muhao was a Postdoctoral Fellow at UPenn from 2019 to 2020. He received his Ph.D. from the Department of Computer Science at UCLA in 2019. Before joining UCLA as a Ph.D. student, he received his bachelor's degree from Fudan University in 2014.

Hi, Muhao! Glad to catch up with you. Please tell me where you’re living and what you’re up to these days.

I’m living in Sacramento, the capital city of California. As usual, aside from work, I still enjoy traveling (especially road trips to different places). Luckily, from Sac or the Bay Area it is easy to reach most places in the country by direct flight or by car.

That’s excellent! I’m glad you’ve been getting to travel.

What are the most rewarding things about your current work?

What I find most rewarding is having built a well-established NLP group since I started as a faculty member. All of my students have done an excellent job building strong academic records of their own. Over a year ago, the first batch of PhD students graduated, and they have become very successful researchers in industry. A few more are graduating soon and are looking for (or will soon be looking for) faculty positions (I wish them the best of luck!). I hope that one day the group can be as successful as CCG and have many successful alumni.

And yes, best of luck to them! What about the most surprising?

Most of my group members have pets (mostly cats, as we list in one of the sections of our people page: https://luka-group.github.io/people.html). I recently got pets of my own: two Roborovski hamsters.

They’re very sweet. I like that the nonhumans get special recognition in your lab. (-:

How connected is your work now with what you did in our group?

NLP is moving extremely fast these days. But quite a few things we’ve done recently, especially those related to LLM reasoning and indirect supervision, are closely related to what I did at CCG. In fact, I still collaborate with Dan and other past or current CCG members, such as Ben, Wenpeng, Haoyu, Hongming, and Qiang, on these topics. We have given tutorials on this research every year since 2020.

What new development(s) in the field of NLP are you excited about right now?

Our group has focused on machine learning robustness since it was founded. In particular, we have recently become very interested in the safety of LLMs. We build systems that automatically identify safety issues in LLMs, safeguard LLMs against malicious use, and protect them from threats and vulnerabilities when they interact with complex environments. This area is especially important now that LLMs are becoming the backbone of more and more intelligent systems and are starting to handle thousands of real-world tasks.

I’m glad to hear you’re focusing on safety issues as LLMs grow.

Thoughts about the state of AI?

This is an exciting time: AI researchers are building ever larger learning-based systems that not only solve everyday problems but also help with frontier scientific discovery in many other fields, such as biology, medicine, chemistry, and food science. On the one hand, it is a good time for us to work with other disciplines on the many scenarios where AI can contribute. On the other hand, it is an important time for academia to collaborate more closely with industry, as the AI systems we now seek to build require significantly more computing and data resources.

How are things outside of work?

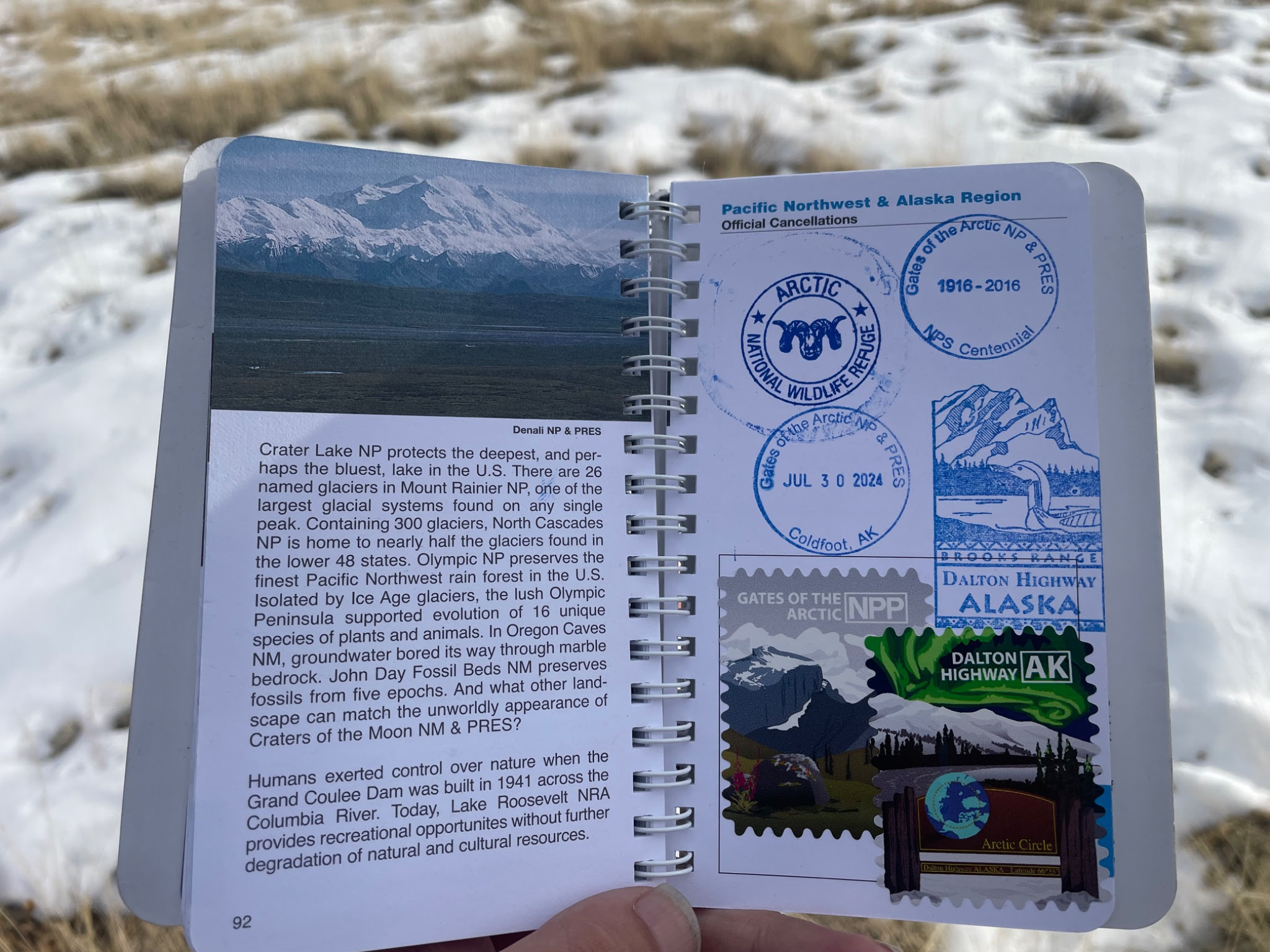

I just finished my checklist of visiting all the US national parks that can be reached by driving. Last summer I drove the Dalton Highway to reach the Gates of the Arctic.

Congratulations! That’s fantastic. So, how many national parks have you visited? And which have made a particular impression on you?

I’ve been to 56 national parks (counting only official “National Parks”, not national monuments, national historical parks, etc., though I’ve been to many of those as well). There are 7 national parks I still haven’t been to (3 in Alaska, 1 island park in California, 1 island park in Florida, 1 in American Samoa, and 1 in the Virgin Islands), because all of them must be reached by air taxi or cruise ship, while I have just finished all the ones that can be reached by driving. I really love the US national park system because almost every park is different from the others, with many unique sights to see and roads to drive.

Favorite park: if I had to pick one, it would definitely be Yellowstone, which stands out from all the rest. But I’ve been asked to pick my top 5 in the past, and I ended up picking a top 6: Yellowstone (WY), Death Valley (CA), Arches (UT), Carlsbad Caverns (NM), Redwood (CA), and Badlands (SD).

Excellent! Do you have a memory to share from your time with the group?

It was the campus lockdown period in 2020, but a few of us had hotpot every Friday at my apartment. A few of us also still spent time together in the 3401 Walnut building during the lockdown, and we had a lot of fun. There were times when we stayed late in the building before paper deadlines, and times when we brought game consoles to play in the room where Dan used to host his group meetings. All of them except Haoyu have graduated now.

Any advice for the current students and postdocs in the group?

One important thing I learned from Dan is to develop good research taste. Doing meaningful research is not about publishing more and more papers. In fact, only the first paper, the best paper, and probably the last paper on a topic are the memorable ones.

Thanks for this insight. And thank you so much for this interview!

For more information on Muhao Chen’s research at UC Davis, please visit his website.