This summer, for the first time in a few years, we had a substantial group of summer interns working with us, mentored by PhD student Sihao Chen and postdoc Vivek Gupta. Some were visiting from universities as far away as Utah and California, but the group also included a Penn undergrad and a local high schooler. We were honored to work with them and incorporate them into our research work. As the summer term winds up, I’ve asked for their thoughts on the experience and what they learned and enjoyed here!

Harshavardhan Kalalbandi

Master’s student at the University of California, Riverside

My internship at Prof. Dan Roth’s Cognitive Computation Group has been an incredible journey. During the first few weeks I spent time going through many research papers to formulate a good problem. I found a keen interest in temporal QA on tables, where the tables can be represented in various ways. We started by establishing baselines using existing reasoning methods. We proposed a dynamic reasoning approach, allowing the model to have flexibility in formulating its approach. Initially, we tried solving the problem through simple prompting, but when that proved insufficient, we explored alternative approaches.

Our efforts led us to develop a multi-agent approach and a fine-tuning method for our system. A significant challenge has been keeping pace with the rapid advancements in state-of-the-art NLP research. A highlight of this experience was meeting Prof. Dan Roth and his incredible team, which was both inspiring and fun. Dr. Vivek Gupta’s mentorship and expertise was very helpful in seeing through this work. I also explored the incredible campus of Penn, went around Philadelphia, and traveled to New York City and DC during the weekends. It was a great fun experience where I learned a lot, met incredible people, and enjoyed myself.

Kushagra Dixit

Master’s student at the University of Utah

This summer, I had the amazing opportunity to intern with the Cognitive Computation Group at the University of Pennsylvania. During my time here, I worked on two fascinating domains: improving the temporal understanding of large language models on semi-structured data and exploring the mechanisms behind in-context learning in LLMs. These projects allowed me to delve deep into some of the most exciting trends in NLP research, providing invaluable experience that I will carry forward in my career.

One of the most enjoyable aspects of this internship has been the vibrant and collaborative environment. I’ve had the pleasure of interacting with PhD students in the lab, sharing ideas with fellow interns, and receiving invaluable guidance from Dr. Vivek Gupta. Every discussion with Professor Dan Roth has been particularly special. His insights and expertise have consistently challenged and inspired me, leaving a profound impact on my approach to research. I will leave this internship not only with a wealth of new knowledge but also with cherished memories of the fun and engaging moments shared with the team.

In terms of skills, I’ve significantly sharpened my programming abilities, but more importantly, I’ve learned how to drive a research project from inception to completion. Engaging with so many experienced researchers has provided me with numerous opportunities to understand their thought processes, which has been a critical learning experience. As I move forward, I am excited to continue my engagement with CogComp, building on the strong foundation this internship has provided, and contributing further to NLP research.

Mike Zhou

Undergrad at the University of Pennsylvania

Hi! My name is Mike and I’m currently a rising senior at Penn. This summer, I’ve had the pleasure to be a part of the cognitive computation lab, where I’ve been focusing on language model reasoning and understanding. My current projects involve exploring large language models’ abilities to reason, and whether their reasoning comes from a form of deduction or simply from semantic associations. My day-to-day routine in the lab involves keeping up with literature, designing experiments that I’ll run, and talking with other lab mates to give and gain insights on each other’s projects. Overall, I’d say that I’ve learned quite a bit about and gained a good number of insights on language models this summer, whether it was from my work, peers, or Professor Roth.

Yanzhen Shen

Undergrad at the University of Illinois Urbana-Champaign

Hi! I’m Yanzhen Shen, an undergraduate student at the University of Illinois at Urbana-Champaign. This summer, I had the privilege of conducting research at the University of Pennsylvania under Professor Dan Roth and my PhD mentor, Sihao Chen. During this experience, I worked on cutting-edge research in Information Retrieval and gained a deeper understanding of the qualities of a good researcher.

Our project focused on improving retrieval systems, particularly for complex queries. While current systems handle simple queries effectively, they struggle with ones with logical operators, such as “Orchids of Indonesia and Malaysia but not Thailand.” To address this, we are using text embedding models to better interpret logical operators like AND, OR, and NOT in complex queries. Our goal was to push the boundaries of how query and document dense embeddings can represent complex information.

Technically, I became proficient in dual encoder training frameworks and data processing for Information Retrieval tasks.

More importantly, discussions with Professor Roth helped me view our ideas from broader perspectives. For example, he continued to encourage me to think about how our model differs from existing dense retrieval models and other retrieval systems. In addition, working closely with Sihao also gave me insights into how a senior PhD student approaches and resolves challenges in complex research problems. We also engaged in paper discussions, where he taught me to think about critical questions when reading papers, such as “What makes a good researcher question?” and “ Is this work influential?”

Atharv Kulkarni

Undergrad at the University of Utah

This summer, I had the incredible opportunity to intern at the University of Pennsylvania’s Cognitive Computation group under the guidance of Professor Dan Roth. The main focus of my internship was to enhance the capabilities of language models for complex reasoning tasks using neurosymbolic approaches. I created a question-answer dataset based on the Wikipedia information of Olympic sport athletes and used it to evaluate state-of-the-art language models. This involved integrating Wikipedia data into a database and developing SQL queries to create a large synthetic dataset. I discovered that models often struggle with temporal reasoning tasks, which led to productive discussions with Professor Roth about using neurosymbolic techniques to improve reasoning performance. By refining models with fine-tuning and prompt engineering, I made significant progress in enhancing language models’ ability to transform natural language questions into SQL queries.

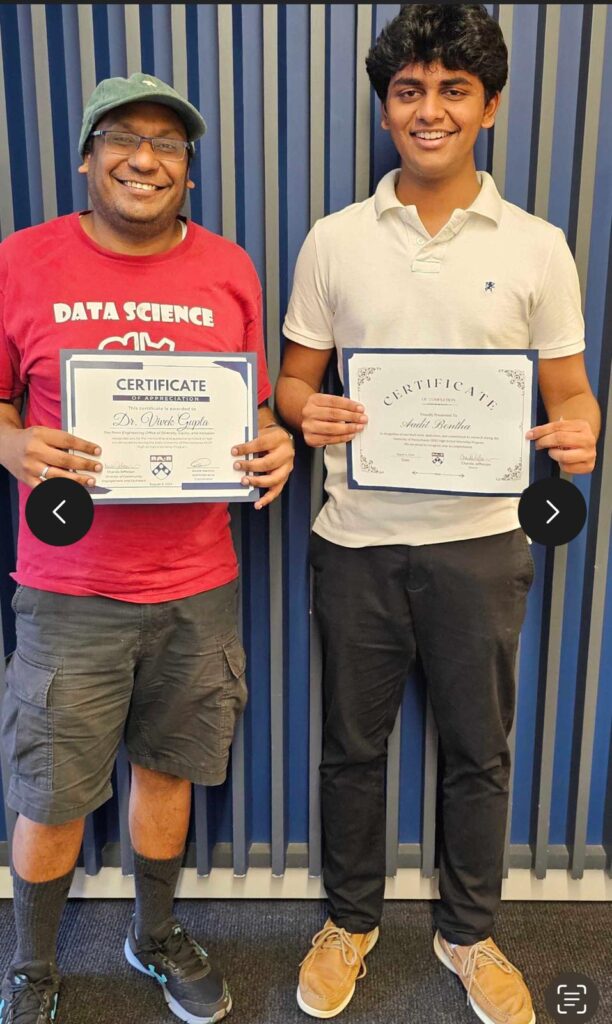

Beyond the technical work, Professor Roth’s mentorship was invaluable. His insightful feedback and guidance helped me develop skills in fine-tuning models and utilizing various APIs, significantly advancing my understanding of the field. His expertise provided me with a deeper appreciation for the nuances of research and inspired me to think critically about complex problems. Mentoring Aadit Bontha, a high school student interning with our CogComp group, was a rewarding experience, offering me a fresh perspective on language models. Exploring Philadelphia’s iconic sites, such as City Hall and the Benjamin Franklin Museum, along with my peers, added to the memorable experiences of this summer. Overall, I gained a deeper understanding of research and thoroughly enjoyed collaborating with my peers. I am grateful to Professor Dan Roth and Dr. Vivek Gupta for this invaluable opportunity.

Aadit Bontha

HS Student at La Salle College High School

During my internship at UPenn, I had the opportunity to work on a project centered around large language models (LLMs) and their ability to extract and organize data from various sources. My main task was to explore how these models could generate structured answers, like tables, from diverse data inputs—a challenge that required both technical skill and a deep understanding of AI.

I started by working with the TANQ dataset, which includes a vast collection of question-answer pairs. My role involved extracting relevant information and using LLMs to generate structured outputs. This required extensive coding in Python, utilizing tools like BeautifulSoup for web scraping and the JSON module for data parsing.

One of the most interesting aspects of the internship was experimenting with different AI models, such as GPT-3.5 and Gemini 1.5. Through this, I gained insights into how these models perform in various scenarios—GPT-3.5 excels at handling complex queries, while Gemini 1.5 is more effective with simpler, structured tasks.

I also focused on refining the prompts we used to optimize model performance. This involved understanding how to craft questions that would elicit the most accurate and relevant responses from the models. Overall, the internship was a highly educational experience that enhanced my skills in AI and data processing, providing me with valuable insights into the practical applications of these technologies.