The 2024 Annual Meeting of the Association for Computational Linguistics (ACL) is underway in Bangkok! We’re excited to share the work that’s being presented and published from CCG and our collaborating authors. You can find links to our ACL papers below!

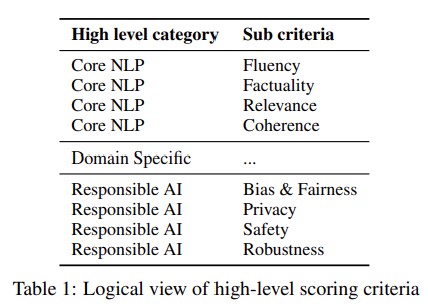

ConSiDERS-The-Human Evaluation Framework: Rethinking Human Evaluation for Generative Large Language Models

In this position paper, we argue that human evaluation of generative large language models (LLMs) should be a multidisciplinary undertaking that draws upon insights from disciplines such as user experience research and human behavioral psychology to ensure that the experimental design and results are reliable. To design an effective human evaluation system in the age of generative NLP, we propose the ConSiDERS-The-Human evaluation framework, consisting of 6 pillars: Consistency, Scoring Criteria, Differentiating, User Experience, Responsible, and Scalability.

Aparna Elangovan, Ling Liu, Lei Xu, Sravan Bodapati, and Dan Roth, ConSiDERS-The-Human Evaluation Framework: Rethinking Human Evaluation for Generative Large Language Models (ACL 2024)

Winner of the Outstanding Paper Award at the ACL 2024 Workshop on Knowledgeable LMs

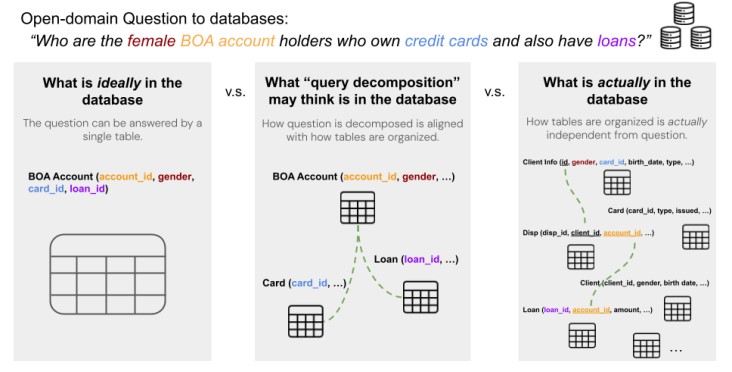

Is Table Retrieval a Solved Problem? Exploring Join-Aware Multi-Table Retrieval

Retrieving the tables that contain the information needed to answer a given question is critical to open-domain question-answering (QA) systems over tables. However, many questions require retrieving multiple tables and joining them through a join plan that cannot be discerned from the user query itself. In this paper, we introduce a method that uncovers useful join relations for any query and database during table retrieval. We use a novel re-ranking method formulated as a mixed-integer program that considers not only table-query relevance but also table-table relevance, which requires inferring join relationships.

Peter Baile Chen, Yi Zhang, and Dan Roth, Is Table Retrieval a Solved Problem? Exploring Join-Aware Multi-Table Retrieval (ACL 2024)
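
To give a feel for why join-awareness can change which tables get retrieved, here is a minimal toy sketch. It is not the paper's formulation: it assumes a simplified objective (the sum of table-query relevance plus a weighted sum of pairwise table-table join scores over a fixed number of selected tables) and replaces the mixed-integer solver with brute-force enumeration. The function name `rerank_tables`, the `join_weight` parameter, the table names, and every score are hypothetical.

```python
from itertools import combinations


def rerank_tables(query_scores, join_scores, k, join_weight=1.0):
    """Pick the k tables maximizing query relevance plus pairwise join compatibility.

    query_scores: dict mapping table name -> table-query relevance (made-up values).
    join_scores:  dict mapping frozenset({a, b}) -> table-table join score (made-up values).
    Brute-force enumeration stands in for a mixed-integer solver; fine for tiny inputs only.
    """
    tables = sorted(query_scores)
    best_subset, best_value = None, float("-inf")
    for subset in combinations(tables, k):
        # Table-query term: how relevant each selected table is to the question.
        relevance = sum(query_scores[t] for t in subset)
        # Table-table term: how joinable each selected pair is (0 if unknown).
        joinability = sum(
            join_scores.get(frozenset(pair), 0.0)
            for pair in combinations(subset, 2)
        )
        value = relevance + join_weight * joinability
        if value > best_value:
            best_subset, best_value = subset, value
    return best_subset, best_value


# Hypothetical scores for illustration only.
query_scores = {"orders": 0.9, "customers": 0.4, "products": 0.6, "reviews": 0.7}
join_scores = {
    frozenset({"orders", "customers"}): 0.8,
    frozenset({"orders", "products"}): 0.3,
    frozenset({"products", "reviews"}): 0.2,
}

selected, score = rerank_tables(query_scores, join_scores, k=2)
relevance_only, _ = rerank_tables(query_scores, join_scores, k=2, join_weight=0.0)
```

With these made-up numbers, ranking by query relevance alone picks `orders` and `reviews`, while the join-aware objective picks `customers` and `orders`, because the strong join score between them outweighs the lower standalone relevance of `customers`.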

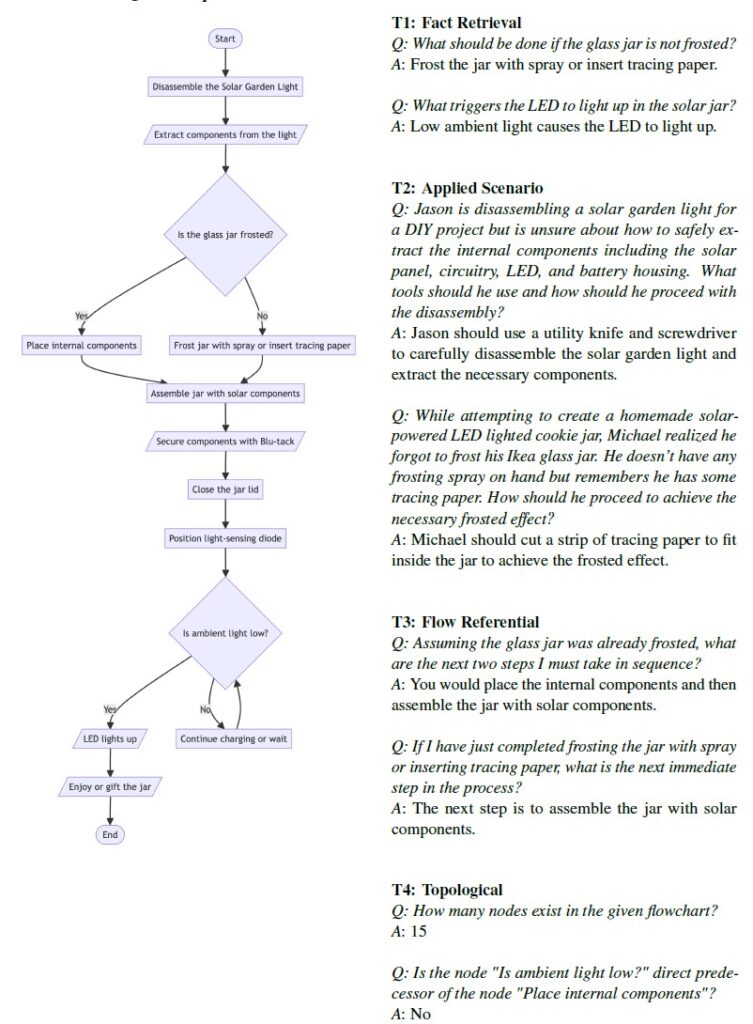

FlowVQA: Mapping Multimodal Logic in Visual Question Answering with Flowcharts

This paper introduces FlowVQA to overcome the shortcomings of existing visual question answering benchmarks in visual grounding and spatial reasoning. FlowVQA features 2,272 flowchart images and 22,413 question-answer pairs to evaluate tasks like information localization, decision-making, and logical reasoning. The evaluation of various multimodal models highlights FlowVQA’s potential to advance multimodal modelling and improve visual and logical reasoning skills.

Shubhankar Singh, Purvi Chaurasia, Yerram Varun, Pranshu Pandya, Vatsal Gupta, Vivek Gupta, and Dan Roth, FlowVQA: Mapping Multimodal Logic in Visual Question Answering with Flowcharts (ACL-Findings 2024)

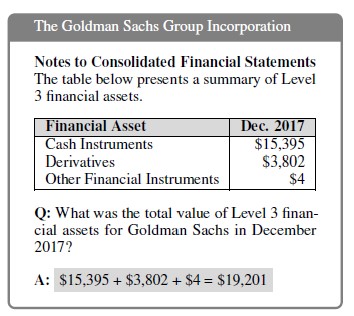

Evaluating LLMs’ Mathematical Reasoning in Financial Document Question Answering

In this paper, we assess LLM robustness in complex mathematical reasoning with financial tabular datasets, revealing that LLMs struggle with increasing table and question complexity, especially with multiple arithmetic steps and hierarchical tables. The new EEDP technique enhances LLM accuracy and robustness by improving domain knowledge, extracting relevant information, decomposing complex questions, and performing separate calculations.

Pragya Srivastava, Manuj Malik, Vivek Gupta, Tanuja Ganu, and Dan Roth, Evaluating LLMs’ Mathematical Reasoning in Financial Document Question Answering (ACL-Findings 2024)